Suppose a prospect asks ChatGPT: “What is the best outreach tool for small B2B teams working with HubSpot?” Within a second, a shortlist appears. No SERP. No ads. No endless blog scrolling. Just a recommendation.

And this happens far more often than most marketers realize. I regularly see prospects discover my SaaS clients through ChatGPT without ever opening Google.

That shifts the core question from “Am I on page 1?” to “Do I appear in the shortlist?” For many SaaS companies, the answer is no. Not because the product isn’t good, but because AI models cannot clearly understand or describe it.

In this guide, I’ll show you how to fix that based on what I’ve seen work across dozens of SaaS companies.

TL;DR

- AI assistants like ChatGPT and Perplexity are replacing Google as the starting point for SaaS buyers: they generate an immediate shortlist instead of a list of blue links.

- Visibility is no longer about ranking, but about being clear, factual, and consistent enough for AI models to mention you.

- You increase that visibility by building an AI-friendly content architecture: best-of and use-case pages, honest comparisons and alternatives, short stats/quotes AI can extract, strong founder positioning, real FAQs, structured data, press mentions, machine-readable YouTube transcripts, authentic reviews, and LinkedIn Pulse content.

In short: SEO gets you into Google; structure + clarity + trust signals get you into LLM shortlists.

How AI Models Really Search: Query Fan-Out, RAG, and Context Matching

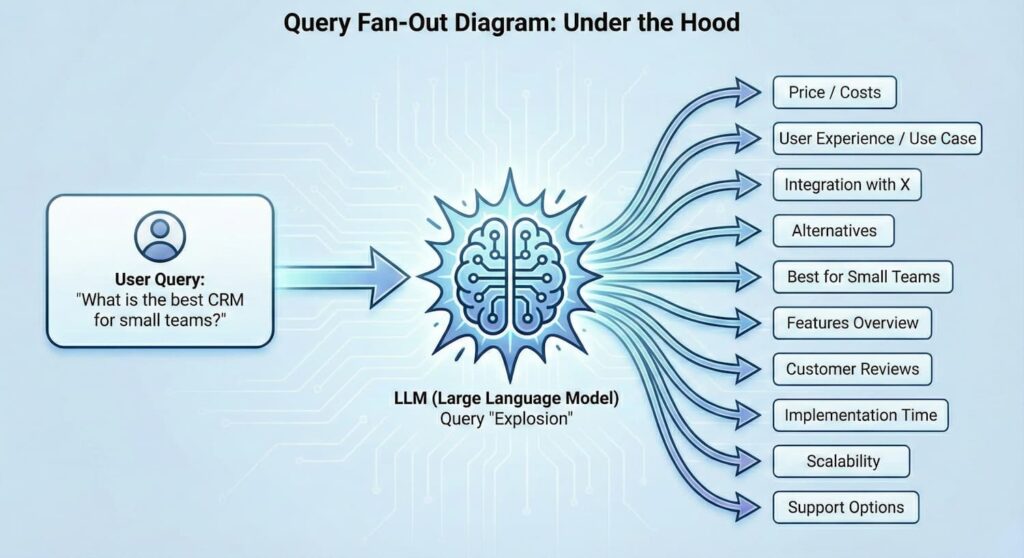

When someone asks an AI model a complex question, four things happen under the hood and together they determine which SaaS tools end up in the shortlist.

1. The model dissects every nuance in the question

From a single sentence, an LLM can infer:

- the user’s role

- team size

- tech stack

- budget constraints

- intent (research vs. evaluation)

- the use case

- limitations or conditions

This interpretation drives everything that follows.

2. The model generates dozens of micro-queries (query fan-out)

Instead of running one search, the model creates a swarm of intent-specific queries:

- “cold email tools under $200 for HubSpot users”

- “best cold outreach software for agencies using Close CRM”

- “deliverability-focused outreach platforms for small teams”

- “budget-friendly alternatives to [competitor X]”

This is why you should never write for a single keyword.

You write for hundreds of intent variations, most of which will never appear in a keyword tool.
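To get a feel for how quickly intent variations multiply, here is a minimal sketch. It is not how any AI assistant actually builds its micro-queries; it simply combines a few facets of a buyer question into every possible variation. The facet names and values in `FACETS` are hypothetical placeholders, so swap in the ones that fit your category.

```python
from itertools import product

# Hypothetical facets a buyer question might contain; replace with your own.
FACETS = {
    "category": ["cold email tools", "outreach platforms"],
    "audience": ["small B2B teams", "agencies"],
    "stack": ["HubSpot", "Close CRM"],
    "constraint": ["under $200", "with strong deliverability"],
}


def fan_out(facets: dict[str, list[str]]) -> list[str]:
    """Combine every facet value into one intent-specific query string."""
    queries = []
    for category, audience, stack, constraint in product(
        facets["category"],
        facets["audience"],
        facets["stack"],
        facets["constraint"],
    ):
        queries.append(f"best {category} for {audience} using {stack} {constraint}")
    return queries


if __name__ == "__main__":
    variations = fan_out(FACETS)
    print(len(variations))  # 2 * 2 * 2 * 2 = 16 variations
    for query in variations[:3]:
        print(query)
```

Four facets with two values each already produce 16 variations. Use the resulting list as a checklist: for each variation, ask whether a page on your site answers it explicitly.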

3. The model actively retrieves evidence via RAG

Modern AI assistants like Perplexity, ChatGPT Search, and Google AI Overviews use RAG: Retrieval-Augmented Generation. That means the model doesn’t rely solely on internal knowledge; it pulls live fragments from the web to support its answer.

It strongly prefers information that is short, factual, and verifiable: a concise quote, one-sentence statistic, clear definition, or FAQ-style answer.

These fragments are easy to extract and safe for AI to repeat. They often become the building blocks of the final answer.

That’s why quotes, stats, and extractable facts work so well in an AI-first content strategy: they match exactly what RAG systems look for and trust.

4. The model filters based on clarity and reliability, not ranking

Before generating a recommendation, the model evaluates whether a source is safe to use. It checks for:

- Extractability: HTML, bullets, headings, tables

- Consistency: Are the same facts repeated elsewhere?

- Neutrality: No promotional language

- Third-party confirmation: Reddit, G2, press releases, documents

- Reliability: No conflicting prices or claims

- Recency: Is the information up to date?

AI behaves less like a search engine and more like an evaluator: it “opens your file,” checks whether your story is coherent, looks for external confirmation and only then decides whether you belong in the shortlist.
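You can run a rough version of the consistency and reliability checks yourself before an AI model does. The sketch below assumes you have collected a few facts by hand from the places that describe your product; the sources, fields, and values in `listings` are hypothetical. It flags every field that has more than one distinct value across sources.

```python
from collections import defaultdict

# Hypothetical facts collected by hand from pages that describe your product.
listings = [
    {"source": "own pricing page", "starting_price": "$49/mo", "category": "sales engagement"},
    {"source": "G2 profile", "starting_price": "$49/mo", "category": "sales engagement"},
    {"source": "old press release", "starting_price": "$39/mo", "category": "email outreach"},
]


def find_conflicts(rows: list[dict[str, str]]) -> dict[str, dict[str, list[str]]]:
    """Return every field with more than one distinct value, plus its sources."""
    seen: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for row in rows:
        for field, value in row.items():
            if field != "source":
                seen[field][value].append(row["source"])
    # Keep only the fields where the sources disagree.
    return {field: dict(values) for field, values in seen.items() if len(values) > 1}


if __name__ == "__main__":
    for field, values in find_conflicts(listings).items():
        print(field, values)
```

In this made-up example, the old press release disagrees with the other two sources on both price and category, which is exactly the kind of conflict worth cleaning up first.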

Why the Shift to AI Search Is a Huge Opportunity for SaaS

For years, SEO was an uphill battle for startups: large domains with strong authority almost always won. AI models are far less hierarchical. They reward:

- clarity

- consistency

- corroboration

- contextual relevance

This means SaaS teams that focus on structured, clear, externally validated content can compete within months, even against companies that dominated SEO for a decade.

For the first time in a long time, the sharpest explanation wins, not the biggest domain.

Start by Researching Who AI Recommends Today

Before rewriting anything, you need to understand how AI models currently interpret your category. ChatGPT, Perplexity, and Google AI often reveal which sources they use, which tools they recommend, and which arguments they rely on.

Equally important: discover what questions your prospects actually ask.

AI surfaces these too, and they often resemble the “People Also Ask” patterns in Google’s search results.

Find real buyer questions through:

- Perplexity autosuggest

- ChatGPT prompt trail suggestions

- Google AI Overviews + PAA

- AlsoAsked, AnswerThePublic, Ahrefs

- Conversation logs from your own sales, support, and customer success teams. This is one of the most underestimated sources: these teams deal daily with the questions that AI will later have to answer.

Combine these, and you get a clear picture of the questions and criteria AI will use to include (or exclude) your product.

When you manually review AI’s answers, you’ll see patterns SEO tools never reveal:

- which competitors appear consistently

- in what contexts (use case, budget, team size, stack)

- which sources dominate (Reddit, G2, YouTube, product docs)

- which content types get cited (best-of, comparisons, alternatives, walkthroughs)

- which outdated arguments AI keeps repeating

This gives you not just who is visible, but why.

It also shows you exactly where to optimize: missing use cases, outdated listings, overly promotional content, weak documentation, inconsistent product descriptions, etc.

A Brief Practical Observation (Why This Is Not Theory)

In my work with B2B SaaS teams, this shift is already visible. Prospects increasingly say things like:

“I asked ChatGPT for the best tools for X, and you came up.”

What’s interesting is that this often happens with companies that already had strong SEO fundamentals because they unintentionally created the kinds of pages AI loves: clear explanations, factual content, comparisons, use cases, consistent terminology.

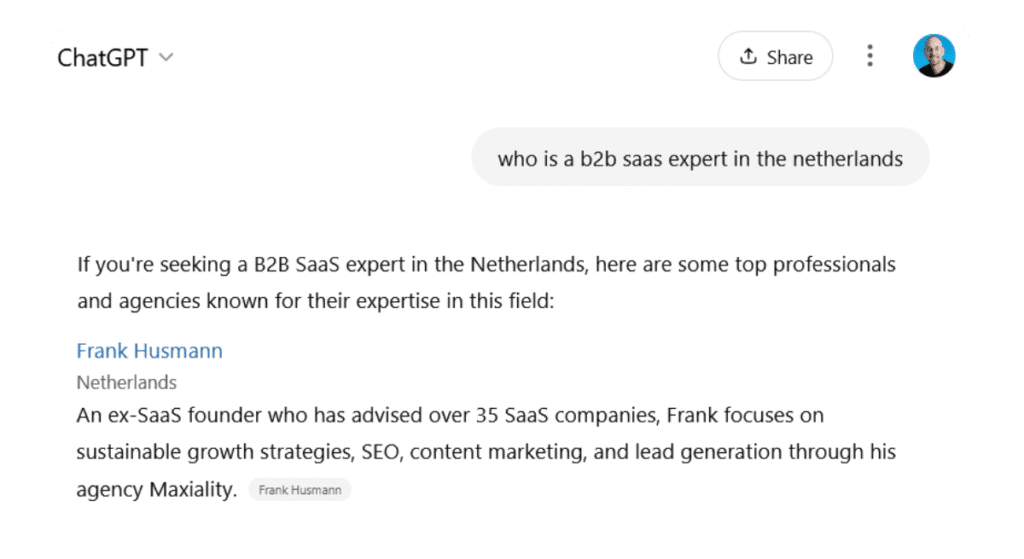

I see the same in my own field: ask an LLM about B2B SaaS marketers, and I often appear in the results. Not because I actively optimize for it, but because my content uses consistent patterns, clear positioning, and repeatable language. And that’s precisely what AI models rely on.

AI isn’t rewarding new behavior. It’s finally rewarding what has always mattered: clarity, structure, and consistency.

How to Create Content for Visibility in ChatGPT, Perplexity, Claude, and Google AI

To be visible in AI models, you must meet two conditions: first, AI must understand what you do, and that happens through the content on your own site. Then, AI must also dare to recommend you, and to do that, it looks at what others say about you through external sources. That confirmation determines whether a model includes you in a shortlist.

So: first you build understandability (internal signals), then trust (external signals). Both are necessary to stand out in ChatGPT, Perplexity, Claude, and Google AI.

Part 1: Create AI-friendly content on your own site (internal signals)

1. Build an ecosystem of “Best-of” and “Best Tool for [Use Case]” pages

Many marketers will think: “But haven’t we always done this in SaaS SEO?” In fact, you can find this in my article on SaaS SEO. The only difference is in the function. In the past, you wrote best-of pages to capture traffic via Google; now, those same pages serve as reference material for AI models.

They use this type of content to understand categories, recognize criteria, and logically compare solutions. What used to be pure bottom-of-funnel content now becomes input for how a model defines your market and which products it recommends within it.

AI models respond especially well to pages like:

- “Best [category] software”

- “Best tools for [use case]”

- “Which tool is right for…?” scenarios

Not because they want to rank these pages but because these pages help them understand the structure of a market.

People don’t ask: “Tell me about CRM software.”

They ask:

- “What’s the best CRM for small remote teams?”

- “What’s the best alternative to HubSpot under $200?”

- “What are the best low-touch onboarding tools for B2B SaaS?”

These questions map exactly to the queries AI models must answer.

When your best-of pages are structured with:

- clear criteria

- neutral descriptions

- comparison tables

- scenarios where each tool fits or doesn’t fit

…they become top candidates for AI citation.

They act as anchor points for the model’s query fan-out: a central hub where dozens of micro-queries converge.

Important: They don’t need to be commercial. They need to be informative.

Include competitors and be honest about trade-offs: that’s what AI trusts.

2. Create a library of use-case pages that AI can match to intent

Many teams assume they already cover this because they have product or feature pages. But AI models look for something different: situations, not features.

SaaS rarely sells “a product”; it sells use cases.

Examples:

- onboarding automation

- outbound workflows for agencies

- lead scoring for small teams

- reporting for PLG companies

- multichannel outreach for SDR teams

AI actively tries to match user prompts to recognizable scenarios like these.

If your website does not explicitly describe these contexts, AI cannot map your solution to real-world problems and it simply won’t recommend you.

To fix that:

- create one page per use case

- describe the problem, audience, workflow, limits, and outcomes

- write as if you’re helping AI make the correct recommendation

These pages often appear verbatim in AI-generated responses because they deliver the one thing LLMs crave: contextual clarity.

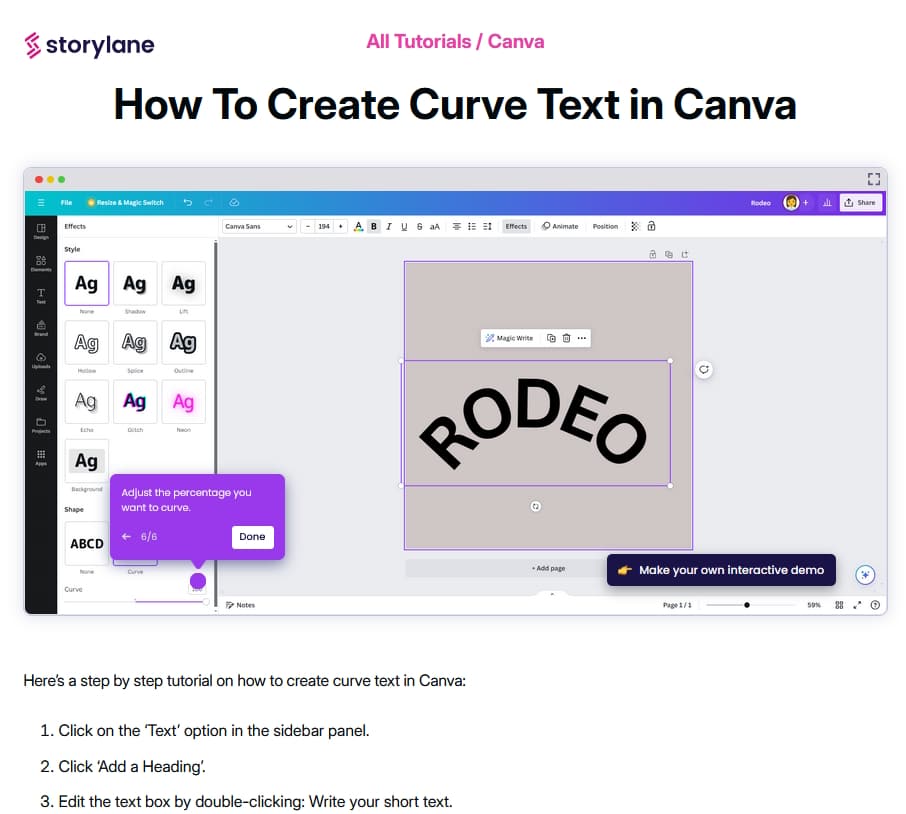

A practical example: Use-case pages as real workflows for AI Search

A useful way to think about use-case content is to look at how some companies document real workflows instead of only describing features. Storylane, for example, creates interactive demos that walk buyers through specific tasks such as how to curve text in Canva or how to connect Salesforce with Jira. Their approach resulted in 787 demo pages ranking directly in AI-driven queries (source).

This type of content works well for AI Search because it gives models something they cannot generate themselves: actual product behavior. AI can describe what software does, but it cannot simulate logging in, clicking through a workflow, or interacting with real UI states.

By publishing pages that show how a tool solves a concrete use-case problem, you give AI (and buyers) a clear, unambiguous example of the use case you want to be associated with.

3. Build comparison pages that are more honest than your competitors would ever dare

Comparison pages used to be persuasive assets.

In an AI-first world, they become explanatory assets.

Their job is no longer to convince people but to teach AI how solutions differ.

AI does not trust exaggeration or vague marketing claims.

It wants:

- clear differences

- trade-offs

- target audience distinctions

- explicit use-case fit

- real strengths and real weaknesses

A comparison should answer:

- Where are you stronger?

- Where is the competitor stronger?

- Which type of team is better suited to which tool?

- When is your product not the best choice?

That last one is essential: it signals objectivity, which AI values deeply.

Interestingly, many comparison pages don’t rank well in Google, yet they are cited in AI answers, precisely because they’re semantically rich and neutral.

4. Publish “Alternatives to [Competitor]” pages that AI can cite

Alternatives pages are not new to SaaS marketing but their role has fundamentally changed.

Previously, these pages existed to capture long-tail search queries like “[X] alternative.”

Today, AI models use them to:

- define categories

- map similarities between solutions

- understand positioning

- group products logically

They are read less by humans and far more by models trying to understand your market.

These pages are extremely impactful because users in AI systems constantly ask for alternatives:

- “What are the best Instantly alternatives?”

- “What’s similar to Asana but simpler?”

- “What are the best Outreach alternatives?”

AI views well-written alternatives pages as:

- context-rich

- comparative

- neutral

- evidence-based

- ideal for citation

If you write them factually, not as sales copy, they become authority magnets inside AI ecosystems.

| Phase | Aware of | Content Type | Title |
|---|---|---|---|
| Bottom | Product | Page | [Your SaaS] alternative |
| Bottom | Product | Page | [Your SaaS] competitor |
| Bottom | Product | Page | [Your SaaS] vs [competitor] |

5. Add short quotes, figures, and source references

AI models frequently quote compact, verifiable fragments: a single sentence with a statistic, a short quote, or a clear source reference. GEO research shows that these fragments can increase visibility in generative AI answers by up to 40% (source), simply because they’re easier for models to extract and reuse.

For SaaS companies, this means your content must include “hooks” that AI can confidently lift:

- a clean statistic

- a concise insight

- a referenced fact

- one or two lines of proprietary data

These micro-facts improve both authority and quotability.

This works especially well when the data comes from:

- your own benchmarks

- product metrics

- customer results

- reputable third-party sources

Keep these fragments short: most LLMs only quote one or two sentences at a time.

In short: the more compact and verifiable the fact, the more likely AI is to cite it.

6. Founder positioning: why AI needs to learn to describe your brand differently

AI models often describe SaaS tools in the same generic terms, unless you actively provide language that distinguishes your product. This means that you must explicitly incorporate the founder’s vision, the real positioning, into your content. Not as marketing talk, but as consistent language that AI models can recognize and repeat.

For example: Without detailed positioning, AI sees you as “a project management tool.”

With integrated founder language, that becomes: “a tool designed for remote teams that focuses on asynchronous collaboration.”

That precision doesn’t happen on its own. You achieve this by:

- capturing the distinctive value proposition in founder words,

- weaving 3–5 core messages throughout all your content,

- repeating that same language in help docs, comparisons, and FAQs,

- regularly checking how AI models describe your product,

- and adjusting when their description deviates from your positioning.

The result: AI doesn’t reproduce what you do, but what makes you unique.

This is exactly how we’ve created content for years: through a one-hour monthly founder interview, we generate blogs, LinkedIn posts, YouTube videos, and newsletters. It’s the foundation of our Authority Accelerator process.

7. Add real FAQs to your important pages

FAQs work exceptionally well in AI Search.

Not just because of structured data but because AI models can easily extract and reuse question–answer fragments.

Every query to an LLM triggers dozens of micro-questions:

- “Does this work with HubSpot?”

- “What’s the pricing structure?”

- “What alternatives fit small teams?”

A good FAQ directly answers these micro-intents.

FAQs are powerful for AI because they are:

- short

- factual

- neutral

- semantically rich

This is exactly the type of information AI is confident quoting.

Add FAQs to your:

- product pages

- use-case pages

- comparison guides

- alternatives pages

- even your blog posts

Use real questions prospects actually ask, and keep answers crisp.

FAQs aren’t just helpful for users, they’re one of the most efficient ways to help AI describe your product accurately and completely.
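If you want those question-and-answer pairs to be machine-readable as well, one option is schema.org `FAQPage` markup. Below is a minimal sketch that turns a list of pairs into JSON-LD. The `faq_jsonld` helper and the sample answers are hypothetical; the `FAQPage`, `Question`, and `Answer` types and the `mainEntity`, `name`, `acceptedAnswer`, and `text` properties come from schema.org.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Placeholder answers; use real questions from sales and support conversations.
faqs = [
    ("Does this work with HubSpot?", "Yes, through a two-way HubSpot integration."),
    ("What's the pricing structure?", "Plans are billed per seat, per month."),
]

if __name__ == "__main__":
    print(faq_jsonld(faqs))
```

Place the output inside a `<script type="application/ld+json">` tag on the same page that shows the FAQ, and keep the markup identical to the visible text.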

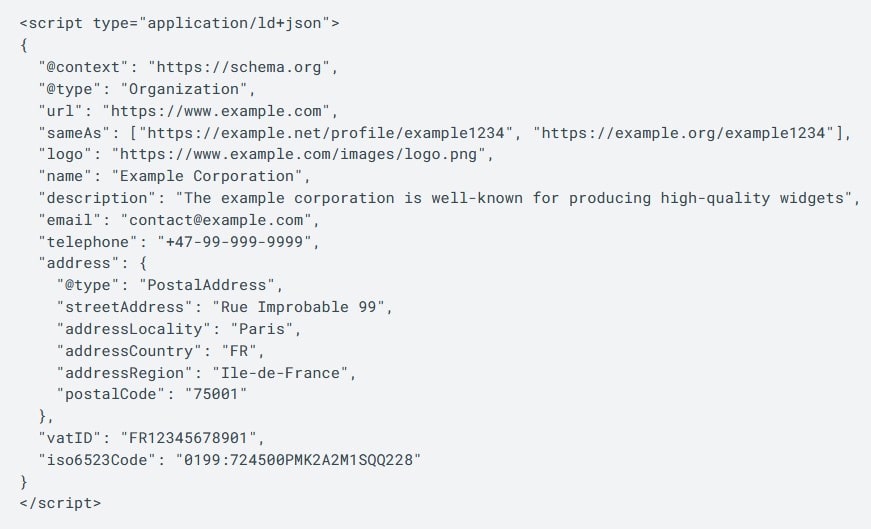

8. Add structured data so AI can interpret your content more accurately

Schemas such as:

- SoftwareApplication

- FAQPage

- Organization

- Product

- Review

don’t just help with classic SEO, they help AI models interpret your content rather than merely read it.

Structured data is to AI what subtitles are to video:

it makes everything more understandable, reliable, and easier to process.

If your category is competitive or ambiguous, structured data often becomes the difference between:

- AI “kind of guessing” what your product does, and

- AI confidently placing you in the correct shortlist

Think of schema as the metadata layer that ensures models actually understand the meaning behind your content.
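As an illustration, here is a minimal sketch of `SoftwareApplication` markup generated as JSON-LD. The product name, description, and price are made up, and the `software_jsonld` helper is hypothetical; the property names (`applicationCategory`, `operatingSystem`, `offers`, `price`, `priceCurrency`) are taken from schema.org.

```python
import json


def software_jsonld(name: str, description: str, price: str, currency: str) -> str:
    """Build a schema.org SoftwareApplication JSON-LD string for a SaaS product."""
    data = {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "description": description,
        "applicationCategory": "BusinessApplication",
        "operatingSystem": "Web",
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    # Hypothetical product details; use the exact wording from your own site.
    print(
        software_jsonld(
            name="ExampleOutreach",
            description="Cold outreach software for small B2B teams that use HubSpot.",
            price="49.00",
            currency="USD",
        )
    )
```

The description is the part to be careful with: reuse the same positioning sentence you use on your product pages, comparisons, and listings, so the markup reinforces your story instead of adding one more variant.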

Part 2: Build AI trust with citations outside your own site (external signals)

These are the signals that AI uses to check whether your story is accurate. Not because you say so, but because the internet confirms it.

1. Use press releases to strengthen your online entity

Press releases are a forgotten weapon in the AI era, but AI models love them. Why? Because press releases are:

- factual

- consistent

- widely distributed across authoritative domains

- written in clear, structured language

- unambiguous about products, features, pricing, and integrations

A good press release helps AI with entity resolution: building a coherent, unified understanding of what your product is and how it fits into a category. This is especially useful if:

- your messaging is inconsistent across the web

- outdated information still circulates

- your product has recently evolved

- your competitors dominate directory listings

The goal of press releases today isn’t media attention. It’s AI trust-building.

2. Add your SaaS to best-of articles on third-party websites

One of the best ways to raise your visibility in AI answers is to appear in best-of lists: the “Top 10,” “Best tools for…,” and “Best alternatives to…” articles found all over the internet. Make sure your SaaS is included in them. You can find these lists by searching Google.

When compiling recommendations, AI models rely heavily on structured, scannable sources because users trust ranked, well-organized lists more than dense or unstructured text. AI systems seem to prefer the same clarity when retrieving and synthesizing evidence.

These lists form the external validation layer that AI models use to determine whether your product deserves a place on a shortlist.

3. Produce YouTube videos that function as “machine-readable knowledge bases”

AI models read YouTube transcripts as if they were long-form blog posts. This makes video far more valuable than most SaaS teams realize. Videos, especially short, practical ones, contain exactly what AI struggles to extract from traditional written content:

- concrete steps

- real screens

- real workflows

- natural language

- specific terminology

- contextual details

This makes transcripts semantically rich sources that AI loves quoting and referencing.

The formats that tend to work best:

- Workflow walkthroughs: “How to set up an outreach campaign in 5 minutes with our tool”

- Use-case demonstrations: “How small teams improve pipeline discipline with X”

- Integration explanations: “How to connect our product to HubSpot or Close CRM”

- Neutral comparisons: “When to choose X, when to choose Y”

Because almost no SaaS companies do this, the upside is enormous. A simple 3–5 minute walkthrough can outperform a 3,000-word blog post in AI visibility because the transcript contains so many “understandable” details.

Also read: video marketing for SaaS

4. Provide visible, real human reviews (Reddit, G2, Capterra)

For an AI model, platforms like G2 are not marketing. These platforms are structured, verifiable input.

Since AI cannot test products itself, reviews become essential signals for:

- authenticity

- sentiment

- risk assessment

- reliability

- user context

- variation in feedback

Reddit is especially influential. When users discuss products in relevant threads, AI often treats these comments as human-grounded truth.

Participating genuinely and not promotionally in these discussions strengthens your credibility.

G2 and Capterra add another layer: they are centralized sources with standardized review formats. AI can easily extract and reuse this data.

Good reviews give AI not just information, but confidence.

5. LinkedIn is now the #2 source for AI citations (and Pulse plays a key role)

Recent studies show that AI models increasingly derive their confidence from platforms that are directly linked to real people with verifiable expertise. A large-scale SEMrush analysis of 230,000 prompts (ChatGPT, Google AI, and Perplexity) shows that LinkedIn is now the second most cited source in AI responses, right after Reddit.

Research by Spotlight also confirms this: AI tools cite LinkedIn sources 4 to 5 times more often than a year ago. Notably, more than 78% of all LinkedIn citations come from LinkedIn Pulse articles. In other words, LLMs structurally view long-form content directly linked to a personal profile as more reliable than anonymous blogs or generic content websites.

Why? Because LinkedIn Pulse articles provide AI with multiple verification signals at once: a recognizable author, a professional history, niche expertise, and content consistency. AI does not have to “guess” whether a source is reliable: the combination of person and subject checks out.

For SaaS companies, this means that LinkedIn is no longer just a distribution channel, but a citation source for AI search. You can take advantage of this by having founders or subject matter experts systematically answer the questions that buyers ask AI (“How do you evaluate X?”, “When does Y fail?”, “What are real alternatives to Z?”). That increases the chance that your brand or perspective will literally be built into AI answers.

This makes LinkedIn Pulse an accessible alternative to Reddit: less anonymous, easier to control, and directly linked to your brand.

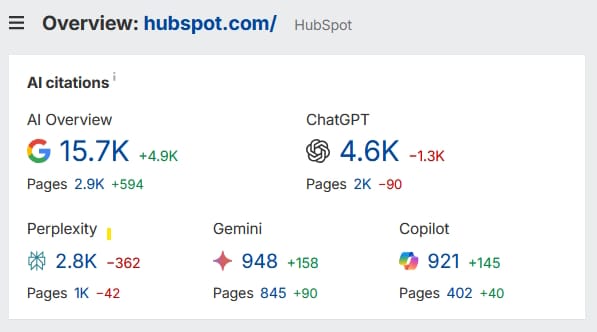

How to Measure Whether AI Visibility Is Actually Working

AI Search requires different metrics than traditional SEO. You don’t look at positions, but at presence: how often does your product appear in AI answers within your category? That’s your practical share of voice – not as a competitive scoreboard, but as an indicator that AI recognizes your product and finds it relevant.

Equally important is the nature of the mention. Are you only mentioned as “another option,” or does AI provide context about your strengths, typical use cases, or price level? That difference says more about the quality of your information than about your visibility.

Because AI traffic often comes in indirectly – first via a recommendation, later via branded search or direct navigation – attribution is less about click behavior and more about recognition. You can see this in three places: an increase in brand searches (brand lift), higher-quality inbound leads, and answers in onboarding such as: “I came across you in ChatGPT.”

The key is simple: Don’t measure whether AI ranks you “at the top” because that concept doesn’t exist.

Measure whether AI understands you, can explain you, and is willing to mention you.

Tip 1: We always measure brand lift using a Looker Studio Dashboard based on Google Search Console (GSC) data. In GSC, you can see exactly how many clicks your brand receives in Google’s search results.

Tip 2: Add an open text field on all your lead forms: “How did you find us?” You’ll start seeing “ChatGPT,” “Perplexity,” or “Google AI Overview” far sooner than you expect.
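The brand-lift check from Tip 1 comes down to simple arithmetic: sum the clicks on branded queries in two periods and compare them. Here is a minimal sketch of that calculation. It is not our dashboard; the rows are hypothetical stand-ins for a Search Console query export, and the field names `query` and `clicks` as well as the brand term `acme` are assumptions.

```python
def brand_clicks(rows: list[dict], brand_terms: list[str]) -> int:
    """Sum clicks for queries that contain any brand term (case-insensitive)."""
    terms = [term.lower() for term in brand_terms]
    return sum(
        row["clicks"]
        for row in rows
        if any(term in row["query"].lower() for term in terms)
    )


def brand_lift(before: list[dict], after: list[dict], brand_terms: list[str]) -> float:
    """Relative change in branded clicks between two periods."""
    base = brand_clicks(before, brand_terms)
    if base == 0:
        raise ValueError("No branded clicks in the baseline period")
    return (brand_clicks(after, brand_terms) - base) / base


# Hypothetical query data for two consecutive periods.
last_quarter = [
    {"query": "acme outreach", "clicks": 120},
    {"query": "acme pricing", "clicks": 80},
    {"query": "cold email tools", "clicks": 300},
]
this_quarter = [
    {"query": "Acme outreach", "clicks": 150},
    {"query": "acme pricing", "clicks": 100},
    {"query": "cold email tools", "clicks": 310},
]

if __name__ == "__main__":
    print(f"{brand_lift(last_quarter, this_quarter, ['acme']):.0%}")
```

Non-branded queries are deliberately ignored here, because the point is to see whether more people search for you by name after meeting you somewhere else, such as in an AI answer.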

Do You Need Tools to Measure AI Visibility?

You don’t need a heavy analytics platform. Most of what matters starts with manual check-ins:

- Ask ChatGPT and Perplexity the questions your prospects ask.

- Note which tools appear, in what order, and with what reasoning.

This is often more revealing than any dashboard.
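If you log those manual check-ins in a simple structure, you can turn them into a rough share-of-voice number. The sketch below assumes one log entry per prompt and assistant, each with the list of tools that appeared in the answer. All tool names and prompts are hypothetical, and `share_of_voice` is an illustrative helper, not a standard metric definition.

```python
from collections import Counter

# Hypothetical log of manual check-ins: one entry per prompt and assistant.
answers = [
    {"assistant": "ChatGPT", "prompt": "best outreach tool for HubSpot teams",
     "tools": ["ToolA", "YourSaaS", "ToolB"]},
    {"assistant": "Perplexity", "prompt": "best outreach tool for HubSpot teams",
     "tools": ["ToolA", "ToolB"]},
    {"assistant": "ChatGPT", "prompt": "cold email tools under $200",
     "tools": ["YourSaaS", "ToolA"]},
    {"assistant": "Perplexity", "prompt": "cold email tools under $200",
     "tools": ["ToolB", "ToolC", "YourSaaS"]},
]


def share_of_voice(log: list[dict]) -> dict[str, float]:
    """Fraction of logged answers in which each tool is mentioned at least once."""
    counts: Counter[str] = Counter()
    for entry in log:
        # A set ensures a tool counts once per answer, even if listed twice.
        counts.update(set(entry["tools"]))
    return {tool: count / len(log) for tool, count in counts.items()}


if __name__ == "__main__":
    ranked = sorted(share_of_voice(answers).items(), key=lambda item: -item[1])
    for tool, share in ranked:
        print(f"{tool}: {share:.0%}")
```

Rerun the same prompts every month and compare the numbers over time. The trend matters more than any single reading, because AI answers vary from one run to the next.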

There are emerging tools such as AI Share-of-Voice trackers and LLM citation monitors and they help identify trends over time:

- Who AI mentions

- How often

- Based on which sources

But they do not replace manual research.

They just speed it up.

Tools are useful but the foundation remains the same:

AI will only understand and describe you well if your information is accurate, consistent, and aligned everywhere.

FAQ

Aren’t best-of and comparison pages just old SEO tactics?

It’s true that SaaS marketers have created these pages for years.

The difference is not the format, it’s the reader.

Originally, they were designed for humans searching Google for shortlists.

Today, those same pages are used by AI models to understand:

- how your category works

- which solutions are similar

- what differentiates you

They are no longer conversion pages, they are documentation sources for AI.

What looks the same on the surface has undergone a fundamental shift:

from capturing traffic to delivering meaning.

What’s the difference between AI Search, GEO, AIO, and LLM SEO?

- AI Search: searching via ChatGPT, Perplexity, and Google AI, which return answers, not links.

- GEO (Generative Engine Optimization): optimizing to be named or recommended in those AI answers.

- AIO (AI Optimization): the broader strategy of making AI understand and position your product correctly.

- LLM SEO / AI SEO: applying SEO principles (structure, entities, semantics) to how language models read and extract content.

In short:

SEO is for Google.

AIO ensures AI knows who you are.

GEO ensures AI mentions you when it matters.

Do you need separate optimization strategies for ChatGPT and Perplexity?

Not separate ones, but you must understand their differences.

- ChatGPT relies heavily on Bing’s index → prefers clean structure, FAQs, tables, and extractable explanations.

- Perplexity crawls the web directly → prefers deeper, more complete, more factually detailed content.

So the practical difference:

- ChatGPT prefers easy-to-extract clarity

- Perplexity prefers thorough, well-supported depth

Good AI-first content satisfies both.

Conclusion

The rise of AI models doesn’t make the playing field more chaotic, it makes it fairer. Much of what works is not new at all: be clear, explain exactly what you do, don’t overclaim, be transparent about your strengths and weaknesses, and make sure others can confirm your story.

The only difference is that your explanation now has to make sense not only to people, but also to systems that try to interpret that explanation. Companies that take this seriously don’t build an “AI strategy,” but simply a better foundation for their positioning. That may be less spectacular than many people suggest, but it’s exactly what works.